-

Recruiting Humans for RLHF (Reinforcement Learning from Human Feedback)

Reinforcement Learning from Human Feedback (RLHF) has emerged as a pivotal technique for aligning AI systems, especially generative AI models like large language models (LLMs), with human expectations and values. By incorporating human preferences into the training loop, RLHF helps AI produce outputs that are more helpful, safe, and contextually appropriate.

This article provides a deep dive into RLHF: what it is, its benefits and limitations, when and how it fits into an AI product’s development, the tools used to implement it, and strategies for recruiting human participants to provide the critical feedback that drives RLHF. In particular, we will highlight why effective human recruitment (and platforms like BetaTesting) is crucial for RLHF success.

Here’s what we will explore:

- What is RLHF?

- Benefits of RLHF

- Limitations of RLHF

- When Does RLHF Occur in the AI Development Timeline?

- Tools Used for RLHF

- How to Recruit Humans for RLHF

What is RLHF?

Reinforcement learning from human feedback (RLHF) is a machine learning technique in which a “reward model” is trained with direct human feedback, then used to optimize the performance of an artificial intelligence agent through reinforcement learning – IBM

In essence, humans guide the AI by indicating which outputs are preferable, and the AI learns to produce more of those preferred outputs. This method is especially useful for tasks where the notion of “correct” output is complex or subjective.

For example, it would be impractical (or even impossible) for an algorithmic solution to define ‘funny’ in mathematical terms – but easy for humans to rate jokes generated by a large language model (LLM). That human feedback, distilled into a reward function, could then be used to improve the LLM’s joke writing abilities. In such cases, RLHF allows us to capture human notions of quality (like humor, helpfulness, or style) which are hard to encode in explicit rules.

Originally demonstrated on control tasks (like training agents to play games), RLHF gained prominence in the realm of LLMs through OpenAI’s research. Notably, the InstructGPT model was fine-tuned with human feedback to better follow user instructions, outperforming its predecessor GPT-3 in both usefulness and safety.

This technique was also key to training ChatGPT – “when developing ChatGPT, OpenAI applies RLHF to the GPT model to produce the responses users want. Otherwise, ChatGPT may not be able to answer more complex questions and adapt to human preferences the way it does today.” In summary, RLHF is a method to align AI behavior with human preferences by having people directly teach the model what we consider good or bad outputs.

Check it out: We have a full article on AI Product Validation With Beta Testing

Benefits of RLHF

Incorporating human feedback into AI training brings several important benefits, especially for generative AI systems:

- Aligns output with human expectations and values: By training on human preferences, AI models become “cognizant of what’s acceptable and ethical human behavior” and can be corrected when they produce inappropriate or undesired outputs.

In practice, RLHF helps align models with human values and user intent. For instance, a chatbot fine-tuned with RLHF is more likely to understand what a user really wants and stick within acceptable norms, rather than giving a literal or out-of-touch answer.

- Produces less harmful or dangerous output: RLHF is a key technique for steering AI away from toxic or unsafe responses. Human evaluators can penalize outputs that are offensive, unsafe, or factually wrong, which trains the model to avoid them.

As a result, RLHF-trained models like InstructGPT and ChatGPT generate far fewer hateful, violent, or otherwise harmful responses compared to uninstructed models. This fosters greater trust in AI assistants by reducing undesirable outputs.

- More engaging and context-aware interactions: Models tuned with human feedback provide responses that feel more natural, relevant, and contextually appropriate. Human raters often reward outputs that are coherent, helpful, or interesting.

OpenAI reported that RLHF-tuned models followed instructions better, maintained factual accuracy, and avoided nonsense or “hallucinations” much more than earlier models. In practice, this means an RLHF-enhanced AI can hold more engaging conversations, remember context, and respond in ways that users find satisfying and useful.

- Ability to perform complex tasks aligned with human understanding: RLHF can unlock a model’s capability to handle nuanced or difficult tasks by teaching it the “right” approach as judged by people. For example, humans can train an AI to summarize text in a way that captures the important points, or to write code that actually works, by giving feedback on attempts.

This human-guided optimization enables LLMs with fewer parameters to perform better on challenging queries. OpenAI noted that its labelers preferred outputs from the 1.3B-parameter version of InstructGPT over even outputs from the 175B-parameter version of GPT-3, showing that targeted human feedback can beat brute-force scale in certain tasks.

Overall, RLHF allows AI to tackle complex, open-ended tasks in ways that align with what humans consider correct or high-quality.

Limitations of RLHF

Despite its successes, RLHF also comes with notable challenges and limitations:

- Expensive and resource-intensive: Obtaining high-quality human preference data is costly and does not easily scale. The need to gather firsthand human input can create a bottleneck that limits the scalability of the RLHF process.

Training even a single model can require thousands of human feedback judgments, and employing experts or large crowds of annotators can drive up costs. This is one reason companies are researching partial automation of the feedback process (for example, AI-generated feedback as a supplement) to reduce reliance on humans.

- Subjective and inconsistent feedback: Human opinions on what constitutes a “good” output can vary widely.

“Human input is highly subjective. It’s difficult, if not impossible, to establish firm consensus on what constitutes ‘high-quality’ output, as human annotators will often disagree… on what ‘appropriate’ model behavior should mean.”

In other words, there may be no single ground truth for the model to learn, and feedback can be noisy or contradictory. This subjectivity makes it hard to perfectly optimize to “human preference,” since different people prefer different things.

- Risk of bad actors or trolling: RLHF assumes feedback is provided in good faith, but that may not always hold. Poorly incentivized crowd workers might give random or low-effort answers, and malicious users might try to teach the model undesirable behaviors.

Researchers have even identified “troll” archetypes who give harmful or misleading feedback. Robust quality controls and careful participant recruitment are needed to mitigate this issue (more on this in the recruitment section below).

- Bias and overfitting to annotators: An RLHF-tuned model will reflect the perspectives and biases of those who provided the feedback. If the pool of human raters is narrow or unrepresentative, the model can become skewed.

For example, a model tuned only on Western annotators’ preferences might perform poorly for users from other cultures. It’s essential to use diverse and well-balanced feedback sources to avoid baking in bias.

In summary, RLHF improves AI alignment but is not a silver bullet – it demands significant human effort, good experimental design, and continuous vigilance to ensure the feedback leads to better, not worse, outcomes.
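The subjectivity described above can be put into numbers before any training happens. The following is a minimal sketch, assuming each rater recorded which of two responses ("A" or "B") they preferred for each prompt; the rater ids, prompt ids, and the `pairwise_agreement` helper are hypothetical names used only for illustration.

```python
from itertools import combinations


def pairwise_agreement(labels_by_annotator):
    """Fraction of (annotator pair, item) cases where both chose the same label.

    labels_by_annotator maps an annotator id to {item_id: label}.
    Only items rated by both annotators in a pair are compared.
    Returns None when no two annotators share an item.
    """
    agree = 0
    total = 0
    for a, b in combinations(sorted(labels_by_annotator), 2):
        shared = labels_by_annotator[a].keys() & labels_by_annotator[b].keys()
        for item in shared:
            total += 1
            if labels_by_annotator[a][item] == labels_by_annotator[b][item]:
                agree += 1
    return agree / total if total else None


# Hypothetical ratings: which response each rater preferred per prompt.
ratings = {
    "rater_1": {"prompt_1": "A", "prompt_2": "B", "prompt_3": "A"},
    "rater_2": {"prompt_1": "A", "prompt_2": "A", "prompt_3": "A"},
    "rater_3": {"prompt_1": "B", "prompt_2": "B", "prompt_3": "A"},
}

print(f"Agreement rate: {pairwise_agreement(ratings):.2f}")
```

A low agreement rate does not necessarily mean the raters are careless; it may simply indicate that the guidelines leave room for interpretation, which is worth knowing before the data is used to train a reward model.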

When Does RLHF Occur in the AI Development Timeline?

RLHF is typically applied after a base AI model has been built, as a fine-tuning and optimization stage in the AI product development lifecycle. By the time you’re using RLHF, you usually have a pre-trained model that’s already learned from large-scale data; RLHF then adapts this model to better meet human expectations.

The RLHF pipeline for training a large language model usually involves multiple phases:

- Supervised fine-tuning of a pre-trained model: Before introducing reinforcement learning, it’s common to perform supervised fine-tuning (SFT) on the model using example prompts and ideal responses.

This step “primes” the model with the format and style of responses we want. For instance, human trainers might provide high-quality answers to a variety of prompts (Q&A, writing tasks, etc.), and the model is tuned to imitate these answers.

SFT essentially “‘unlocks’ capabilities that GPT-3 already had, but were difficult to elicit through prompt engineering alone.” In other words, it teaches the model how it should respond to users before we start reinforcement learning.

- Reward model training (human preference modeling): Next, we collect human feedback on the model’s outputs to train a reward model. This usually involves showing human evaluators different model responses and having them rank or score which responses are better.

For example, given a prompt, the model might generate multiple answers; humans might prefer Answer B over Answer A, etc. These comparisons are used to train a separate neural network – the reward model – that takes an output and predicts a reward score (how favorable the output is).

Designing this reward model is tricky because asking humans to give absolute scores is hard; using pairwise comparisons and then mathematically normalizing them into a single scalar reward has proven effective. The reward model effectively captures the learned human preferences.

- Policy optimization via reinforcement learning: In the final phase, the original model (often called the “policy” in RL terms) is further fine-tuned using reinforcement learning algorithms, with the reward model providing the feedback signal.

A popular choice is Proximal Policy Optimization (PPO), which OpenAI used for InstructGPT and ChatGPT. The model generates outputs, the reward model scores them, and the model’s weights are adjusted to maximize the reward. Care is taken to keep the model from deviating too much from its pre-trained knowledge: PPO-based setups typically include safeguards, such as a penalty for drifting too far from the original model, that prevent the model from “gaming” the reward by producing gibberish that the reward model happens to score highly.

Through many training iterations, this policy optimization step trains the model to produce answers that humans (as approximated by the reward model) would rate highly. After this step, we have a final model that hopefully aligns much better with human-desired outputs.
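To make the reward-modeling phase above more concrete, here is a minimal sketch of how a single pairwise comparison can be turned into a training signal. It assumes a Bradley-Terry style formulation, which is one common choice and not necessarily what any particular team uses; the function names and the reward values are hypothetical.

```python
import math


def preference_probability(reward_chosen, reward_rejected):
    """Modeled probability that the human prefers the 'chosen' response,
    given the scalar rewards the reward model assigned to each response."""
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))


def pairwise_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood of the human's choice.

    Written in a numerically stable form: log(1 + exp(-d)) with
    d = reward_chosen - reward_rejected.
    """
    d = reward_chosen - reward_rejected
    return max(-d, 0.0) + math.log1p(math.exp(-abs(d)))


# Hypothetical reward-model scores for two answers to the same prompt,
# where the human preferred the first answer of each pair.
print(pairwise_loss(2.0, 0.0))  # small loss: model agrees with the human
print(pairwise_loss(0.0, 2.0))  # large loss: model disagrees with the human
```

Training the reward model amounts to lowering this loss across many human comparisons, so that responses people preferred end up with higher scores than the ones they rejected.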

It’s worth noting that pre-training (the initial training on a broad dataset) is by far the most resource-intensive part of developing an LLM. The RLHF fine-tuning stages above are relatively lightweight in comparison – for example, OpenAI reported that the RLHF process for InstructGPT used <2% of the compute that was used to pre-train GPT-3.

RLHF is a way to get significant alignment improvements without needing to train a model from scratch or use orders of magnitude more data; it leverages a strong pre-trained foundation and refines it with targeted human knowledge.
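The idea of keeping the tuned model close to its starting point during policy optimization can also be sketched in a few lines. This is a simplified illustration, assuming a per-response penalty based on the difference in log-probabilities between the model being tuned and a frozen reference copy; the `shaped_reward` name, the `beta` value, and the numbers below are assumptions made for the example, not settings taken from any published system.

```python
def shaped_reward(reward_model_score, logprob_policy, logprob_reference, beta=0.1):
    """Reward-model score minus a penalty for drifting from the reference model.

    logprob_policy / logprob_reference: log-probability of the sampled
    response under the model being tuned and under the frozen reference.
    beta: hypothetical penalty strength; in practice it is tuned per project.
    """
    drift = logprob_policy - logprob_reference
    return reward_model_score - beta * drift


# A response that both models find equally likely keeps its full score.
print(shaped_reward(1.5, logprob_policy=-12.0, logprob_reference=-12.0))

# A response the reward model loves, but that the reference model finds
# very unlikely (a possible sign of reward gaming), is marked down.
print(shaped_reward(3.0, logprob_policy=-5.0, logprob_reference=-40.0))
```

The design choice here is a trade-off: a larger `beta` keeps the model closer to its original behavior, while a smaller one lets it chase the reward model's score more aggressively.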

Check it out: Top 10 AI Terms Startups Need to Know

Tools Used for RLHF

Implementing RLHF for AI models requires a combination of software frameworks, data collection tools, and evaluation methods, as well as platforms to source the human feedback providers. Key categories of tools include:

Participant recruitment platforms: A crucial “tool” for RLHF is the source of human feedback providers. You need humans (often lots of them) to supply the preferences, rankings, and demonstrations that drive the whole process. This is where recruitment platforms come in (discussed in detail in the next section).

In brief, some options include crowdsourcing marketplaces like Amazon Mechanical Turk, specialized AI data communities, or beta testing platforms to get real end-users involved. The quality of the human feedback is paramount, so choosing the right recruitment approach (and platform) significantly impacts RLHF outcomes.

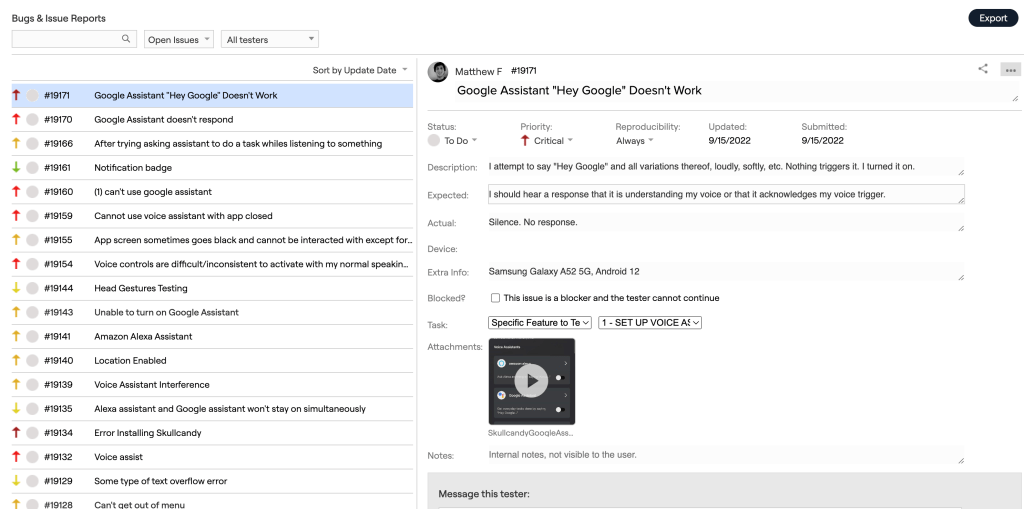

BetaTesting is a platform with a large community of vetted, real-world testers that can be tapped for collecting AI training data and feedback at scale

Other services like Pareto or Surge AI maintain expert labeler networks to provide high-accuracy RLHF annotations, while platforms like Prolific recruit diverse participants who are known for providing attentive and honest responses. Each has its pros and cons, which we’ll explore below.

RLHF training frameworks and libraries: Specialized libraries help researchers train models with RLHF algorithms. For example, Hugging Face’s TRL (Transformer Reinforcement Learning) library provides “a set of tools to train transformer language models” with methods like supervised fine-tuning, reward modeling, and PPO/other optimization algorithms.

Open-source frameworks such as DeepSpeed-Chat (by Microsoft), ColossalChat (by Colossal AI), and newer projects like OpenRLHF have emerged to facilitate RLHF at scale. These frameworks handle the complex “four-model” setup (policy, reward model, reference model, and value model, also called the critic) and help with scaling to large model sizes. In practice, teams leveraging RLHF often start with an existing library rather than coding the RL loop from scratch.

Data labeling & annotation tools: Since RLHF involves collecting a lot of human feedback data (e.g. comparisons, ratings, corrections), robust annotation tools are essential. General-purpose data labeling platforms like Label Studio and Encord now offer templates or workflows specifically for collecting human preference data for RLHF. These tools provide interfaces for showing prompts and model outputs to human annotators and recording their judgments.

Many organizations also partner with data service providers: for instance, Appen (a data annotation company) has an RLHF service that leverages a carefully curated crowd of diverse human annotators with domain expertise to supply high-quality feedback. Likewise, Scale AI offers an RLHF platform with an intuitive interface and collaboration features to streamline the feedback process for labelers.

Such platforms often come with built-in quality control (consistency checks, gold standard evaluations) to ensure the human data is reliable.

Evaluation tools and benchmarks: After fine-tuning a model with RLHF, it’s critical to evaluate how much alignment and performance have improved. This is done through a mix of automated benchmarks and further human evaluation.

A notable tool is OpenAI Evals, an open-source framework for automated evaluation of LLMs. Developers can define custom evaluation scripts or use community-contributed evals (covering things like factual accuracy, reasoning puzzles, harmlessness tests, etc.) to systematically compare their RLHF-trained model against baseline models. Besides automated tests, one might run side-by-side user studies: present users with responses from the new model vs. the old model or a competitor, and ask which they prefer.

When OpenAI launched GPT-4, for example, it reported that RLHF doubled the model’s accuracy on challenging “adversarial” questions – a result discovered through extensive evaluation. Teams also monitor whether the model avoids the undesirable outputs it was trained against (for instance, testing with provocative prompts to see if the model stays polite and safe).

In summary, evaluation tools for RLHF range from code-based benchmarking suites to conducting controlled beta tests with real people in order to validate that the human feedback truly made the model better.
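A side-by-side study like the one described above usually boils down to a simple win rate. The sketch below assumes each participant's vote is recorded as "new", "old", or "tie"; those labels and the `win_rate` helper are hypothetical and chosen only for this example.

```python
from collections import Counter


def win_rate(votes, candidate="new"):
    """Share of decisive (non-tie) votes that went to `candidate`.

    Returns None when every vote is a tie or there are no votes.
    """
    counts = Counter(votes)
    decisive = sum(n for label, n in counts.items() if label != "tie")
    return counts[candidate] / decisive if decisive else None


# Hypothetical votes from eight participants comparing two model versions.
votes = ["new", "new", "old", "tie", "new", "old", "new", "new"]

print(f"New model win rate: {win_rate(votes):.0%}")
```

With small samples, a win rate like this is only a rough signal, so it is worth collecting enough votes (and from a varied enough group) before drawing conclusions.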

How to Recruit Humans for RLHF

Obtaining the “human” in the loop for RLHF can be challenging – the task requires people who are thoughtful, diligent, and ideally somewhat knowledgeable about the context.

As one industry source notes,

“unlike typical data-labeling tasks, RLHF demands in-depth and honest feedback. The people giving that feedback need to be engaged, invested, and ready to put the time and effort into their answers.”

This means recruiting the right participants is crucial. Here are some common strategies for recruiting humans for RLHF projects, and how they stack up:

Internal recruitment (employees or existing users): One way to get reliable feedback is to recruit from within your organization or current user base. For example, a product team might have employees spend time testing a chatbot and providing feedback, or invite power-users of the product to give input.

The advantage is that these people often have domain expertise and a strong incentive to improve the AI. They might also understand the company’s values well (helpful for alignment). However, internal pools are limited in size and can introduce bias – employees might think alike, and loyal customers might not represent the broader population.

This approach works best in early stages or for niche tasks where only a subject-matter expert can evaluate the model. It’s essentially a “friends-and-family” beta test for your AI.

Social media, forums, and online communities: If you have an enthusiastic community or can tap into AI discussion forums, you may recruit volunteers. Announcing an “AI improvement program” on Reddit, Discord, or Twitter, for instance, can attract people interested in shaping AI behavior.

A notable example is the OpenAssistant project, which crowd-sourced AI assistant conversations from over 13,500 volunteers worldwide. These volunteers helped create a public dataset for RLHF, driven by interest in an open-source ChatGPT alternative. Community-driven recruitment can yield passionate contributors, but keep in mind the resulting group may skew towards tech-savvy or specific demographics (not fully representative).

Also, volunteers need motivation – many will do it for altruism or curiosity, but retention can be an issue without some reward or recognition. This approach can be excellent for open projects or research initiatives where budget is limited but community interest is high.

Paid advertising and outreach: Another route is to recruit strangers via targeted ads or outreach campaigns. For instance, if you need doctors to provide feedback for a medical AI, you might run LinkedIn or Facebook ads inviting healthcare professionals to participate in a paid study. Or more generally, ads can be used to direct people to sign-up pages to become AI model “testers.”

This method gives you control over participant criteria (through ad targeting) and can reach people outside existing platforms. However, it requires marketing effort and budget, and conversion rates can be low (not everyone who clicks an ad will follow through to do tedious feedback tasks). It’s often easier to leverage existing panels and platforms unless you need a very specific type of user that’s hard to find otherwise.

If using this approach, clarity in the ad (what the task is, why it matters, and that it’s paid or incentivized) will improve the quality of recruits by setting proper expectations.

Participant recruitment platforms: In many cases, the most efficient solution is to use a platform specifically designed to find and manage participants for research or testing. Several such platforms are popular for RLHF and AI data collection:

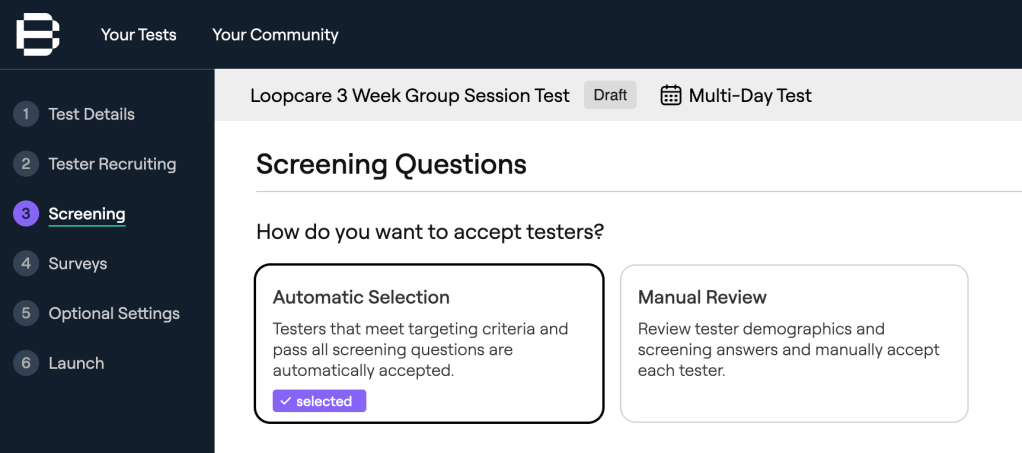

- BetaTesting: A user research and beta-testing platform with a large pool of over 450,000 vetted participants across various demographics, devices, and locations.

We specialize in helping companies collect feedback, bug reports, and “human-powered data for AI” from real-world users. The platform allows targeting by 100+ criteria (age, gender, tech expertise, etc.) and supports multi-day or iterative test campaigns.

For RLHF projects, BetaTesting can recruit a cohort of testers who interact with your AI (e.g., try prompts and rate responses) in a structured way. Because the participants are pre-vetted and the process is managed, you often get higher-quality feedback than a general crowd marketplace. BetaTesting’s focus on real user experience means participants tend to give more contextual and qualitative feedback, which can enrich RLHF training (for instance, explaining why a response was bad, not just rating it).

In practice, BetaTesting is an excellent choice when you want high-quality, diverse feedback at scale without having to build your own community from scratch – the platform provides the people and the infrastructure to gather their input efficiently.

- Pareto (AI): A service that offers expert data annotators on demand for AI projects, positioning itself as a premium solution for RLHF and other data needs. Its approach is more hands-on: the company assembles a team of trained evaluators for your project and manages the process closely.

Pareto emphasizes speed and quality, boasting “expert-vetted data labelers” and “industry-leading accuracy” in fine-tuning LLMs. Clients define the project and Pareto’s team executes it, including developing guidelines and conducting rigorous quality assurance. This is akin to outsourcing the human feedback loop to professionals.

It can be a great option if you have the budget and need very high-quality, domain-specific feedback (for example, fine-tuning a model in finance or law with specialists, ensuring consistent and knowledgeable ratings). The trade-off is cost and possibly less transparency or control compared to running a crowdsourced approach. For many startups or labs, Pareto might be used on critical alignment tasks where errors are costly.

- Prolific: An online research participant platform initially popular in academic research, now also used for AI data collection. Prolific maintains a pool of 200,000+ active participants who are pre-screened and vetted for quality and ethics. Researchers can easily set up studies and surveys, and Prolific handles recruiting participants that meet the study’s criteria.

For RLHF, Prolific has highlighted its capability to provide “a diverse pool of participants who give high-quality feedback on AI models” – the platform even advertises use cases like tuning AI with human feedback. The key strengths of Prolific are data quality and participant diversity. Studies (and Prolific’s own messaging) note that Prolific respondents tend to pay more attention and give more honest, detailed answers than some other crowdsourcing pools.

The platform also makes it easy to integrate with external tasks: you can, for example, host an interface where users chat with your model and rate it, and simply give Prolific participants the link. If your RLHF task requires thoughtful responses (e.g., writing a few sentences explaining preferences) and you want reliable people, Prolific is a strong choice.

The costs are higher per participant than Mechanical Turk, but you often get what you pay for in terms of quality. Prolific also ensures participants are treated and paid fairly, which is ethically important for long-term projects.

- Amazon Mechanical Turk (MTurk): One of the oldest and largest crowd-work platforms, offering access to a vast workforce to perform micro-tasks for modest pay. Many early AI projects (and some current ones) have used MTurk to gather training data and feedback.

On the plus side, MTurk can deliver fast results at scale – if you post a simple RLHF task (like “choose which of two responses is better” with clear instructions), you could get thousands of judgments within hours, given the size of the user base. It’s also relatively inexpensive per annotation. However, the quality control burden is higher: MTurk workers vary from excellent to careless, and without careful screening and validation you may get noisy data. For nuanced RLHF tasks that require reading long texts or understanding context, some MTurk workers may rush through just to earn quick money, which is problematic.

Best practices include inserting test questions (to catch random answers), requiring a qualification test, and paying sufficiently to encourage careful work. Scalability can also hit limits if your task is very complex – fewer Turkers might opt in.

It’s a powerful option for certain types of feedback (especially straightforward comparisons or binary acceptability votes) and has been used in notable RLHF implementations. But when ultimate quality and depth of feedback are paramount, many teams now prefer curated platforms like those above. MTurk remains a useful tool in the arsenal, particularly if used with proper safeguards and for well-defined labeling tasks.

Each recruitment method can be effective, and in fact many organizations use a combination. For example, you might start with internal experts to craft an initial reward model, then use a platform like BetaTesting to get a broader set of evaluators for scaling up, and finally run a public-facing beta with actual end-users to validate the aligned model in the wild. The key is to ensure that your human feedback providers are reliable, diverse, and engaged, because the quality of the AI’s alignment is only as good as the data it learns from.

No matter which recruitment strategy you choose, invest in training your participants and maintaining quality. Provide clear guidelines and examples of good vs. bad outputs. Consider starting with a pilot: have a small group do the RLHF task, review their feedback, and refine instructions before scaling up. Continuously monitor the feedback coming in – if some participants are giving random ratings, you may need to replace them or adjust incentives.
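The monitoring step above, together with the earlier suggestion of inserting test questions, can be sketched as a simple screening routine. This is an illustrative example only: it assumes you have seeded the task with a few items whose preferred answer is already known, and the participant ids, question ids, and the 0.8 threshold are all hypothetical.

```python
def gold_accuracy(answers, gold):
    """Share of known-answer (gold) items a participant answered correctly.

    Returns None if the participant saw none of the gold items.
    """
    checked = [item for item in gold if item in answers]
    if not checked:
        return None
    correct = sum(answers[item] == gold[item] for item in checked)
    return correct / len(checked)


def flag_participants(all_answers, gold, min_accuracy=0.8):
    """Return ids of participants whose gold accuracy is below the threshold."""
    flagged = []
    for participant, answers in all_answers.items():
        accuracy = gold_accuracy(answers, gold)
        if accuracy is not None and accuracy < min_accuracy:
            flagged.append(participant)
    return sorted(flagged)


# Hypothetical gold items and participant answers ("A" or "B" preferred).
gold = {"q1": "A", "q2": "B", "q3": "A", "q4": "B", "q5": "A"}
all_answers = {
    "p1": {"q1": "A", "q2": "B", "q3": "A", "q4": "B", "q5": "A", "q6": "B"},
    "p2": {"q1": "B", "q2": "B", "q3": "B", "q4": "A", "q5": "A"},
    "p3": {"q1": "A", "q2": "B", "q3": "A", "q4": "A", "q5": "A"},
}

print(flag_participants(all_answers, gold))
```

A flag is best treated as a prompt for review rather than an automatic removal, since a participant may have misunderstood the instructions instead of answering at random.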

Remember that RLHF is an iterative, ongoing process (“reinforcement” learning is never really one-and-done). Having a reliable pool of humans to draw from – for initial training and for later model updates – can become a competitive advantage in developing aligned AI products.

Check it out: We have a full article on AI in User Research & Testing in 2025: The State of The Industry

Conclusion

RLHF is a powerful approach for making AI systems more aligned with human needs, but it depends critically on human collaboration. By understanding where RLHF fits into model development and leveraging the right tools and recruitment strategies, product teams and researchers can ensure their AI not only works, but works in a way people actually want.

With platforms like BetaTesting and others making it easier to harness human insights, even smaller teams can implement RLHF to train AI models that are safer, more useful, and more engaging for their users.

As AI continues to evolve, keeping humans in the loop through techniques like RLHF will be vital for building technology that genuinely serves and delights its human audience.

Have questions? Book a call through our call calendar.

-

How To Collect User Feedback & What To Do With It.

In today’s fast-paced market, delivering products that exceed customer expectations is critical.

Beta testing provides a valuable opportunity to collect real-world feedback from real users, helping companies refine and enhance their products before launching new products or features.

Collecting and incorporating beta testing feedback effectively can significantly improve your product, reduce development costs, and increase user satisfaction. Here’s how to systematically collect and integrate beta feedback into your product development cycle, supported by real-world examples from industry leaders.

Here’s what we will explore:

- Collect and Understand Feedback (ideally with the help of AI)

- Prioritize the Feedback

- Integrate Feedback into Development Sprints

- Validate Implemented Feedback

- Communicate Changes and Celebrate Contributions

- Ongoing Iteration and Continuous Improvement

Collect & Understand Feedback (ideally with the help of AI)

Effective beta testing hinges on gathering feedback that is not only abundant but also clear, actionable, and well-organized. To achieve this, consider the following best practices:

- Surveys and Feedback Forms: Design your feedback collection tools to guide testers through specific areas of interest. Utilize a mix of question types, such as multiple-choice for quantitative data and open-ended questions for qualitative insights.

- Video and Audio: Modern qualitative feedback often includes video and audio (e.g. selfie videos, unboxing, screen recordings, conversations with AI bots, etc).

- Encourage Detailed Context: Prompt testers to provide context for their feedback. Understanding the environment in which an issue occurred can be invaluable for reproducing and resolving problems.

- Categorize Feedback: Implement a system to categorize feedback based on themes or severity. This organization aids in identifying patterns and prioritizing responses.

All of the above is made easier by recent advances in AI.

Read our article to learn how AI is currently used in user research.

By implementing these strategies, teams can transform raw feedback into a structured format that is easier to analyze and act upon, ultimately leading to more effective product improvements.

At BetaTesting, we’ve got you covered. Our platform makes it easy to collect and understand feedback in various ways (primarily video, surveys, and bug reports) and offers supporting capabilities for designing and running beta tests that yield clear, actionable, insightful, and well-organized feedback.

Check it out: We have a full article on The Psychology of Beta Testers: What Drives Participation?

Prioritize the Feedback

Collecting beta feedback is only half the battle – prioritizing it effectively is where the real strategic value lies. With dozens (or even hundreds) of insights pouring in from testers, product teams need a clear process to separate signal from noise and determine what should be addressed, deferred, or tracked for later.

A strong prioritization system ensures that the most critical improvements, those that directly affect product quality and user satisfaction, are acted upon swiftly. Here’s how to do it well:

Core Prioritization Criteria

When triaging feedback, evaluate it across several key dimensions:

- Frequency – How many testers reported the same issue? Repetition signals a pattern that could impact a broad swath of users.

- Impact – How significantly does the issue affect user experience? A minor visual bug might be low priority, while a broken core workflow could be urgent.

- Feasibility – How difficult is it to address? Balance the value of the improvement with the effort and resources required to implement it.

- Strategic Alignment – Does the feedback align with the product’s current goals, roadmap, or user segment focus?

This method ensures you’re not just reacting to noise but making product decisions grounded in value and vision.

How to Implement a Prioritization System

To put a structured approach into practice, consider these tactics:

- Tag and categorize feedback: Use tags such as “critical bug,” “minor issue,” “feature request,” or “UX confusion.” This helps product teams spot clusters quickly.

- Create a prioritization matrix: Plot feedback on a 2×2 matrix, impact vs. effort. Tackle high-impact, low-effort items first (your “quick wins”), and flag high-impact/high-effort items for planning in future sprints.

- Involve cross-functional teams: Bring in engineers, designers, and marketers to discuss the tradeoffs of each item. What’s easy to fix may be a huge win, and what’s hard to fix may be worth deferring.

- Communicate decisions: If you’re closing a piece of feedback without action, let testers know why. Transparency helps maintain goodwill and future engagement.

By prioritizing feedback intelligently, you not only improve the product, you also demonstrate respect for your testers’ time and insight. It turns passive users into ongoing collaborators and ensures your team is always solving the right problems.
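The impact vs. effort matrix described above is straightforward to express in code. The sketch below assumes each feedback item has been scored from 1 to 5 on both dimensions; the scores, the example items, and the "fill-in" and "defer" labels for the two low-impact quadrants are assumptions added for illustration (the tactics above name only quick wins and items to plan for future sprints).

```python
def quadrant(impact, effort, threshold=3):
    """Place a feedback item on a 2x2 impact-vs-effort matrix.

    impact and effort are scores from 1 to 5; a score at or above
    `threshold` counts as "high".
    """
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact and not high_effort:
        return "quick win"
    if high_impact and high_effort:
        return "plan for a future sprint"
    if not high_effort:
        return "fill-in"  # low impact, low effort (assumed label)
    return "defer"  # low impact, high effort (assumed label)


# Hypothetical feedback items with team-assigned scores.
feedback = [
    {"title": "Checkout button unresponsive", "impact": 5, "effort": 2},
    {"title": "Rebuild onboarding flow", "impact": 4, "effort": 5},
    {"title": "Typo on settings page", "impact": 1, "effort": 1},
    {"title": "Custom themes", "impact": 2, "effort": 4},
]

for item in feedback:
    print(f'{item["title"]}: {quadrant(item["impact"], item["effort"])}')
```

The scores themselves are the hard part; a cross-functional discussion is usually a better source for them than any single person's estimate.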

Integrate Feedback into Development Sprints

Incorporating user feedback into your agile processes is crucial for delivering products that truly meet user needs. To ensure that valuable insights from beta testing are not overlooked, it’s essential to systematically translate this feedback into actionable tasks within your development sprints.

At Atlassian, this practice is integral to their workflow. Sherif Mansour, Principal Product Manager at Atlassian, emphasizes the importance of aligning feedback with sprint goals:

“Your team needs to have a shared understanding of the customer value each sprint will deliver (or enable you to). Some teams incorporate this in their sprint goals. If you’ve agreed on the value and the outcome, the individual backlog prioritization should fall into place.”

By embedding feedback into sprint planning sessions, teams can ensure that user suggestions directly influence development priorities. This approach not only enhances the relevance of the product but also fosters a culture of continuous improvement and responsiveness to user needs.

To effectively integrate feedback:

- Collect and Categorize: Gather feedback from various channels and categorize it based on themes or features.

- Prioritize: Assess the impact and feasibility of each feedback item to prioritize them effectively.

- Translate into Tasks: Convert prioritized feedback into user stories or tasks within your project management tool.

- Align with Sprint Goals: Ensure that these tasks align with the objectives of upcoming sprints.

- Communicate: Keep stakeholders informed about how their feedback is being addressed.

By following these steps, teams can create a structured approach to incorporating feedback, leading to more user-centric products and a more engaged user base.

Validate Implemented Feedback

After integrating beta feedback into your product, it’s crucial to conduct validation sessions or follow-up tests with your beta testers. This step ensures that the improvements meet user expectations and effectively resolve the identified issues. Engaging with testers post-implementation helps confirm that the changes have had the desired impact and allows for the identification of any remaining concerns.

To effectively validate implemented feedback:

- Re-engage Beta Testers: Invite original beta testers to assess the changes, providing them with clear instructions on what to focus on.

- Structured Feedback Collection: Use surveys or interviews to gather detailed feedback on the specific changes made.

- Monitor Usage Metrics: Analyze user behavior and performance metrics to objectively assess the impact of the implemented changes.

- Iterative Improvements: Be prepared to make further adjustments based on the validation feedback to fine-tune the product.

By systematically validating implemented feedback, you ensure that your product evolves in alignment with user needs and expectations, ultimately leading to higher satisfaction and success in the market.

Communicate Changes and Celebrate Contributions

Transparency is key in fostering trust and engagement with your beta testers. After integrating their feedback, it’s essential to inform them about the changes made and acknowledge their contributions. This not only validates their efforts but also encourages continued participation and advocacy.

Best Practices:

- Detailed Release Notes: Clearly outline the updates made, specifying which changes were driven by user feedback. This helps testers see the direct impact of their input.

- Personalized Communication: Reach out to testers individually or in groups to thank them for specific suggestions that led to improvements.

- Public Acknowledgment: Highlight top contributors in newsletters, blogs, or social media to recognize their valuable input.

- Incentives and Rewards: Offer small tokens of appreciation, such as gift cards or exclusive access to new features, to show gratitude.

By implementing these practices, you create a positive feedback loop that not only improves your product but also builds a community of dedicated users.

Check it out: We have a full article on Giving Incentives for Beta Testing & User Research

Ongoing Iteration and Continuous Improvement

Beta testing should be viewed as an ongoing process rather than a one-time event. Continuous engagement with users allows for regular feedback, leading to iterative improvements that keep your product aligned with user needs and market trends.

Strategies for Continuous Improvement:

- Regular Feedback Cycles: Schedule periodic check-ins with users to gather fresh insights and identify new areas for enhancement.

- Agile Development Integration: Incorporate feedback into your agile workflows to ensure timely implementation of user suggestions.

- Data-Driven Decisions: Use analytics to monitor user behavior and identify patterns that can inform future updates.

- Community Building: Foster a community where users feel comfortable sharing feedback and suggestions, creating a collaborative environment for product development.

By embracing a culture of continuous improvement, you ensure that your product evolves in step with user expectations, leading to sustained success and user satisfaction.

Seeking only positive feedback and cheerleaders is one of the mistakes companies make. We explore these mistakes in depth in this article: Top 5 Mistakes Companies Make In Beta Testing (And How to Avoid Them)

Conclusion

Successfully managing beta feedback isn’t just about collecting bug reports; it’s about closing the loop. When companies gather actionable insights, prioritize them thoughtfully, and integrate them into agile workflows, they don’t just improve their product; they build trust, loyalty, and long-term user engagement.

The most effective teams treat beta testers as partners, not just participants. They validate changes with follow-up sessions, communicate updates transparently, and celebrate tester contributions openly. This turns casual users into invested advocates who are more likely to stick around, spread the word, and continue offering valuable feedback.

Whether you’re a startup launching your first app or a mature product team refining your roadmap, the formula is clear: structured feedback + implementation + open communication = better products and stronger communities. When beta testing is done right, everyone wins.

Have questions? Book a call in our call calendar.

-

Building a Beta Tester Community: Strategies for Long-Term Engagement

In today’s fast-paced and competitive digital market, user feedback is an invaluable asset. Beta testing serves as the critical bridge between product development and market launch, enabling real people to interact with products and offer practical insights.

However, beyond simple pre-launch testing lies an even greater opportunity: a dedicated beta tester community for ongoing testing and engagement. By carefully nurturing and maintaining such a community, product teams can achieve continuous improvement, enhanced user satisfaction, and sustained product success.

Here’s what we will explore:

- The Importance of a Beta Tester Community

- Laying the Foundation

- Strategies for Sustaining Long-Term Engagement

- Leveraging Technology and Platforms

- Challenges and Pitfalls to Avoid

- Case Studies and Real-World Examples

The Importance of a Beta Tester Community

Continuous Feedback Loop with Real Users

One of the most substantial advantages of cultivating a beta tester community is the creation of a continuous feedback loop. A community offers direct, ongoing interaction with real users, providing consistent insights into product performance and evolving user expectations. Unlike one-off testing, a community ensures a constant flow of relevant user feedback, enabling agile, responsive, and informed product development.

Resolving Critical Issues Before Public Release

Beta tester communities act as an early detection system for issues that internal teams may miss. Engaged testers often catch critical bugs, usability friction, or unexpected behaviors early in the product lifecycle. By addressing these issues before they reach the broader public, companies avoid negative reviews, customer dissatisfaction, and costly post-launch fixes. Early resolutions enhance a product’s reputation for reliability and stability.

Fostering Product Advocates

Beta testers in a vibrant community don’t just provide insights; they become passionate advocates of your product. Testers who see their feedback directly influence product development develop a personal stake in its success. Their enthusiasm translates naturally into authentic, influential word-of-mouth recommendations, creating organic marketing momentum that paid advertising struggles to match.

Reducing Costs and Development Time

Early discovery of usability issues through community-driven testing significantly reduces post-launch support burdens. Insightful, targeted feedback allows product teams to focus resources on high-impact features and necessary improvements, optimizing development efficiency. This targeted approach not only saves time but also controls development costs effectively.

Laying the Foundation

Build Your Community

Generating Interest – To build a robust beta tester community, begin by generating excitement around your product. Engage your existing customers, leverage social media, industry forums, or targeted newsletters to announce beta opportunities. Clearly articulate the benefits of participation, such as exclusive early access, direct influence on product features, and recognition as a valued contributor.

Inviting the Right People – Quality matters more than quantity. Invite users who reflect your intended customer base, those enthusiastic about your product and capable of providing clear, constructive feedback. Consider implementing screening questionnaires or short interviews to identify testers who demonstrate commitment, effective communication skills, and genuine enthusiasm for your product’s domain.

Managing the Community – Effective community management is crucial. Assign dedicated personnel who actively engage with testers, provide timely responses, and foster an open and collaborative environment. Transparent and proactive management builds trust and encourages ongoing participation, turning occasional testers into long-term, committed community members.

Set Clear Expectations and Guidelines

Set clear expectations from the outset. Clearly communicate the scope of tests, feedback requirements, and timelines. Providing structured guidelines ensures testers understand their roles, reduces confusion, and results in more relevant, actionable feedback.

Design an Easy Onboarding Process

An easy and seamless onboarding process significantly improves tester participation and retention. Provide clear instructions, necessary resources, and responsive support channels. Testers who can quickly and painlessly get started are more likely to stay engaged over time.

Strategies for Sustaining Long-Term Engagement

Communication and Transparency

Transparent, regular communication is the foundation of sustained engagement. Provide frequent updates on product improvements, clearly demonstrating how tester feedback shapes product development. This openness builds trust, encourages active participation, and fosters a sense of meaningful contribution among testers.

Recognition and Rewards

Acknowledging tester efforts goes a long way toward sustaining engagement. Celebrate their contributions publicly, offer exclusive early access to new features, or provide tangible rewards such as gift cards or branded merchandise. Recognition signals genuine appreciation, motivating testers to remain involved long-term.

Check it out: We have a full article on Giving Incentives for Beta Testing & User Research

Gamification and Community Challenges

Gamification elements, such as leaderboards, badges, or achievements, can significantly boost tester enthusiasm and involvement. Friendly competitions or community challenges create a sense of camaraderie, fun, and ongoing engagement, transforming routine feedback sessions into vibrant, interactive experiences.

Continuous Learning and Support

Providing educational materials, such as tutorials, webinars, and FAQ resources, enriches tester experiences. Supporting their continuous learning helps them understand the product more deeply, allowing them to provide even more insightful and detailed feedback. Reliable support channels further demonstrate your commitment to tester success, maintaining high morale and sustained involvement.

Leveraging Technology and Platforms

Choosing the right technology and platforms is vital for managing an effective beta tester community. Dedicated beta-testing platforms such as BetaTesting streamline tester recruitment, tester management, feedback collection, and issue tracking.

Additionally, communication tools like community forums, Discord, Slack, or in-app messaging enable smooth interactions among testers and product teams. Leveraging such technology ensures efficient communication, organized feedback, and cohesive community interactions, significantly reducing administrative burdens.

Leverage Tools and Automation is one of the 8 tips for managing beta testers. You can read the full article here: 8 Tips for Managing Beta Testers to Avoid Headaches & Maximize Engagement

Challenges and Pitfalls to Avoid

Building and managing a beta community isn’t without challenges. Common pitfalls include neglecting timely communication, failing to implement valuable tester feedback, and providing insufficient support.

Avoiding these pitfalls involves clear expectations, proactive and transparent communication, rapid response to feedback, and nurturing ongoing relationships. Understanding these potential challenges and addressing them proactively helps maintain a thriving, engaged tester community.

Check it out: We have a full article on Top 5 Mistakes Companies Make In Beta Testing (And How to Avoid Them)

How to get started on your own

InfoQ’s Insights on Community-Driven Testing

InfoQ highlights that creating an engaged beta community need not involve large investments upfront. According to InfoQ, a practical approach involves starting with one-off, limited-time beta testing programs, then gradually transitioning toward an ongoing, community-focused engagement model. As they emphasize:

“Building a community is like building a product; you need to understand the target audience and the ultimate goal.”

This perspective reinforces the importance of understanding your community’s needs and objectives from the outset.

Conclusion

A dedicated beta tester community isn’t merely a beneficial addition; it is a strategic advantage that significantly enhances product development and market positioning.

A well-nurtured community provides continuous, actionable feedback, identifies critical issues early, and fosters enthusiastic product advocacy. It reduces costs, accelerates development timelines, and boosts long-term customer satisfaction.

By carefully laying the foundation, employing effective engagement strategies, leveraging appropriate technological tools, and learning from successful real-world examples, startups and product teams can cultivate robust tester communities. Ultimately, this investment in community building leads to products that resonate deeply, perform exceptionally, and maintain sustained relevance and success in the marketplace.

Have questions? Book a call in our call calendar.

-

Top 10 AI Terms Startups Need to Know

This article breaks down the top 10 AI terms that every startup product manager, user researcher, engineer, and entrepreneur should know.

Artificial Intelligence (AI) is beginning to revolutionize products across industries, but AI terminology is new to most of us and can be overwhelming.

We’ll define some of the most important terms, explain what they mean, and give practical examples of how they apply in a startup context. By the end, you’ll have a clearer grasp of key AI concepts that are practically important for early-stage product development – from generative AI breakthroughs to the fundamentals of machine learning.

Here are the 10 AI terms:

- Artificial Intelligence (AI)

- Machine Learning (ML)

- Neural Networks

- Deep Learning

- Natural Language Processing (NLP)

- Computer Vision (CV)

- Generative AI

- Large Language Models (LLMs)

- Supervised Learning

- Fine-Tuning

1. Artificial Intelligence (AI)

In simple terms, Artificial Intelligence is the broad field of computer science dedicated to creating systems that can perform tasks normally requiring human intelligence.

AI is about making computers or machines “smart” in ways that mimic human cognitive abilities like learning, reasoning, problem-solving, and understanding language. AI is an umbrella term encompassing many subfields (like machine learning, computer vision, etc.), and it’s become a buzzword as new advances (especially since 2022) have made AI part of everyday products. Importantly, AI doesn’t mean a machine is conscious or infallible – it simply means it can handle specific tasks in a “smart” way that previously only humans could.

Check it out: We have a full article on AI Product Validation With Beta Testing

Let’s put it into practice. Imagine a startup building an AI-based customer support tool. By incorporating AI, the tool can automatically understand incoming user questions and provide relevant answers or route the query to the right team. Here the AI system might analyze the text of questions (simulating human understanding) and make decisions on how to respond, something that would traditionally require a human support agent. Startups often say they use AI whenever their software performs a task like a human – whether it’s comprehending text, recognizing images, or making decisions faster and at scale.

According to an IBM explanation,

“Any system capable of simulating human intelligence and thought processes is said to have ‘Artificial Intelligence’ (AI).”

In other words, if your product features a capability that lets a machine interpret or decide in a human-like way, it falls under AI.

2. Machine Learning (ML)

Machine Learning is a subset of AI where computers improve at tasks by learning from data rather than explicit programming. In machine learning, developers don’t hand-code every rule. Instead, they feed the system lots of examples and let it find patterns. It’s essentially teaching the computer by example.

A definition by IBM says:

“Machine learning (ML) is a branch of artificial intelligence (AI) focused on enabling computers and machines to imitate the way that humans learn, to perform tasks autonomously, and to improve their performance and accuracy through experience and exposure to more data.”

This means an ML model gets better as it sees more data – much like a person gets better at a skill with practice. Machine learning powers things like spam filters (learning to recognize junk emails by studying many examples) and recommendation engines (learning your preferences from past behavior). It’s the workhorse of modern AI, providing the techniques (algorithms) to achieve intelligent behavior by learning from datasets.

Real world example: Consider a startup that wants to predict customer churn (which users are likely to leave the service). Using machine learning, the team can train a model on historical user data (sign-in frequency, past purchases, support tickets, etc.) where they know which users eventually canceled. The ML model will learn patterns associated with churning vs. staying. Once trained, it can predict in real-time which current customers are at risk, so the startup can take proactive steps.

Unlike a hard-coded program with fixed rules, the ML system learns what signals matter (perhaps low engagement or specific feedback comments), and its accuracy improves as more data (examples of user behavior) come in. This adaptive learning approach is why machine learning is crucial for startups dealing with dynamic, data-rich problems – it enables smarter, data-driven product features.
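To make "learning from examples" concrete, here is a deliberately tiny Python sketch of the churn idea. It is not how a production churn model would be built: the data is made up, it uses a single signal (logins per week), and the "model" is just one threshold picked to best fit past outcomes. The names `history`, `train_threshold`, and `predict_churn` are hypothetical.

```python
# Hypothetical historical data: (logins per week, did the user churn?)
history = [
    (0, True), (1, True), (2, True), (3, False),
    (4, False), (6, False), (1, True), (5, False),
]


def train_threshold(examples):
    """Pick the login threshold that misclassifies the fewest past users.

    Rule being learned: predict churn when logins < threshold.
    """
    best_threshold, best_errors = 0, len(examples) + 1
    for threshold in range(0, 8):
        errors = sum(
            (logins < threshold) != churned for logins, churned in examples
        )
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict_churn(logins, threshold):
    """Apply the learned rule to a current user."""
    return logins < threshold


threshold = train_threshold(history)
print("learned threshold:", threshold)
print("1 login/week at risk:", predict_churn(1, threshold))
print("5 logins/week at risk:", predict_churn(5, threshold))
```

Nobody hand-coded the cutoff here; it was chosen from the labeled examples, and it would shift if new data showed a different pattern. A real model would weigh many signals at once, but the principle is the same.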

3. Neural Networks

A Neural Network is a type of machine learning model inspired by the human brain, composed of layers of interconnected “neurons” that process data and learn to make decisions.

Neural networks consist of virtual neurons organized in layers:

- Input layer (taking in data)

- Hidden layers (processing the data through weighted connections)

- Output layer (producing a result or prediction).

Each neuron takes input, performs a simple calculation, and passes its output to neurons in the next layer.
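The "simple calculation" each neuron performs can be sketched in a few lines of Python. This is an illustrative forward pass only: the weights and biases below are hand-picked for the example (a real network would learn them), and the sigmoid activation is one common choice among several.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each neuron: weighted sum of its inputs plus a bias, then an activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


# Illustrative, hand-picked values; training would adjust these.
hidden_weights = [[0.5, -0.2], [0.1, 0.8]]  # two hidden neurons, two inputs each
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]              # one output neuron
output_biases = [0.0]

inputs = [1.0, 2.0]                                             # input layer
hidden = layer_forward(inputs, hidden_weights, hidden_biases)   # hidden layer
output = layer_forward(hidden, output_weights, output_biases)   # output layer
print(output)
```

Data flows one way here: the output of each layer becomes the input of the next.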

Through training, the network adjusts the strength (weights) of all these connections, allowing it to learn complex patterns. A clear definition is: “An Artificial Neural Network (ANN) is a computational model inspired by the structure and functioning of the human brain.”

These models are incredibly flexible – with enough data, a neural network can learn to translate languages, recognize faces in photos, or drive a car. Simpler ML models might look at data features one by one, but neural nets learn many layers of abstraction (e.g. in image recognition, early layers might detect edges, later layers detect object parts, final layer identifies the object).

Learn more about: What is a Neural Network from AWS

Example: Suppose a startup is building an app that automatically tags images uploaded by users (e.g., detecting objects or people in photos for an album). The team could use a neural network trained on millions of labeled images. During training, the network’s neurons learn to activate for certain visual patterns – some neurons in early layers react to lines or colors, middle layers might respond to shapes or textures, and final layers to whole objects like “cat” or “car.”

After sufficient training, when a user uploads a new photo, the neural network processes the image through its layers and outputs tags like “outdoor”, “dog”, “smiling person” with confidence scores. This enables a nifty product feature: automated photo organization.

For the startup, the power of neural networks is that they can discover patterns on their own from raw data (pixels), which is far more scalable than trying to hand-code rules for every possible image scenario.

4. Deep Learning

Deep Learning is a subfield of machine learning that uses multi-layered neural networks (deep neural networks) to learn complex patterns from large amounts of data.

The term “deep” in deep learning refers to the many layers in these neural networks. A basic neural network might have one hidden layer, but deep learning models stack dozens or even hundreds of layers of neurons, which allows them to capture extremely intricate structures in data. Deep learning became practical in the last decade due to big data and more powerful computers (especially GPUs).

A helpful definition from IBM states:

“Deep learning is a subset of machine learning that uses multilayered neural networks, called deep neural networks, to simulate the complex decision-making power of the human brain.”

In essence, deep learning can automatically learn features and representations from raw data. For example, given raw audio waveforms, a deep learning model can figure out low-level features (sounds), mid-level (phonetics), and high-level (words or intent) without manual feature engineering.

This ability to learn directly from raw inputs and improve with scale is why deep learning underpins most modern AI breakthroughs – from voice assistants to self-driving car vision. However, deep models often require a lot of training data and computation. The payoff is high accuracy and the ability to tackle tasks that were previously unattainable for machines.

Many startups leverage deep learning for tasks like natural language understanding, image recognition, or recommendation systems. For instance, a streaming video startup might use deep learning to recommend personalized content. They could train a deep neural network on user viewing histories and content attributes: the network’s layers learn abstract notions of user taste.

Early layers might learn simple correlations (e.g., a user watches many comedies), while deeper layers infer complex patterns (perhaps the user likes “light-hearted coming-of-age” stories specifically). When a new show is added, the model can predict which segments of users will love it.

The deep learning model improves as more users and content data are added, enabling the startup to serve increasingly accurate recommendations. This kind of deep recommendation engine is nearly impossible to achieve with manual rules, but a deep learning system can continuously learn nuanced preferences from millions of data points.

5. Natural Language Processing (NLP)

Natural Language Processing enables computers to understand, interpret, and generate human language (text or speech). NLP combines linguistics and machine learning so that software can work with human languages in a smart way. This includes tasks like understanding the meaning of a sentence, translating between languages, recognizing names or dates in text, summarizing documents, or holding a conversation.

Essentially, NLP is what allows AI to go from pure numbers to words and sentences – it bridges human communication and computer processing.

Techniques in NLP range from statistical models to deep learning (today’s best NLP systems often use deep learning, especially with large language models). NLP can be challenging because human language is messy, ambiguous, and full of context. However, progress in NLP has exploded, and modern models can achieve tasks like answering questions or detecting sentiment with impressive accuracy. From a product perspective, if your application involves text or voice from users, NLP is how you make sense of it.

Imagine a startup that provides an AI writing assistant for marketing teams. This product might let users input a short prompt or some bullet points, and the AI will draft a well-written blog post or ad copy. Under the hood, NLP is doing the heavy lifting: the system needs to interpret the user’s prompt (e.g., understand that “social media campaign for a new coffee shop” means the tone should be friendly and the content about coffee), and then generate human-like text for the campaign.

NLP is also crucial for startups doing things like chatbots for customer service (the bot must understand customer questions and produce helpful answers), voice-to-text transcription (converting spoken audio to written text), or analyzing survey responses to gauge customer sentiment.

By leveraging NLP techniques, even a small startup can deploy features like language translation or sentiment analysis that would have seemed sci-fi just a few years ago. In practice, that means startups can build products where the computer actually understands user emails, chats, or voice commands instead of treating them as opaque strings of text.

Check it out: We have a full article on AI-Powered User Research: Fraud, Quality & Ethical Questions

6. Computer Vision (CV)

Just as NLP helps AI deal with language, computer vision helps AI make sense of what’s in an image or video. This involves tasks like object detection (e.g., finding a pedestrian in a photo), image classification (recognizing that an image is a cat vs. a dog), face recognition, and image segmentation (outlining objects in an image).

Computer vision combines advanced algorithms and deep learning to achieve what human vision does naturally – identifying patterns and objects in visual data. Modern computer vision often uses convolutional neural networks (CNNs) and other deep learning models specialized for images.

These models can automatically learn to detect visual features (edges, textures, shapes) and build up to recognizing complete objects or scenes. With ample data (millions of labeled images) and training, AI vision systems can sometimes even outperform humans in certain recognition tasks (like spotting microscopic defects or scanning thousands of CCTV feeds simultaneously).
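To give a feel for what "detecting edges" means, here is a small Python sketch of the sliding-filter operation at the heart of a convolutional layer. The image and the filter values are made up for illustration, and the filter is written by hand, whereas a CNN would learn its filter values from data. As in most deep learning libraries, the filter is applied without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        result.append(row)
    return result


# Tiny grayscale image: dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-written vertical-edge filter (an assumption for this example).
kernel = [
    [-1, 1],
    [-1, 1],
]

edges = convolve2d(image, kernel)
print(edges)  # [[0, 18, 0], [0, 18, 0]]
```

The output is large only where brightness changes from left to right, which is exactly where the edge sits. Stacking many learned filters like this is how later layers build up to textures, shapes, and whole objects.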

As Micron describes,

“Computer vision is a field of AI that focuses on enabling computers to interpret and understand visual data from the world, such as images and videos.”

For startups, this means your application can analyze and react to images or video – whether it’s verifying if a user uploaded a valid ID, counting inventory from a shelf photo, or powering the “try-on” AR feature in an e-commerce app – all thanks to computer vision techniques.

Real world example: Consider a startup working on an AI-powered quality inspection system for manufacturing. Traditionally, human inspectors look at products (like circuit boards or smartphone screens) to find defects. With computer vision, the startup can train a model on images of both perfect products and defective ones.

The AI vision system learns to spot anomalies – perhaps a scratch, misaligned component, or wrong color. On the assembly line, cameras feed images to the model which flags any defects in real time, allowing the factory to remove faulty items immediately. This dramatically speeds up quality control and reduces labor costs.

Another example: a retail-focused startup might use computer vision in a mobile app that lets users take a photo of an item and search for similar products in an online catalog (visual search). In both cases, computer vision capabilities become a product feature – something that differentiates the startup’s offering by leveraging cameras and images.

The key is that the AI isn’t “seeing” in the conscious way humans do, but it can analyze pixel patterns with such consistency and speed that it approximates a form of vision tailored to the task at hand.

7. Generative AI

Generative AI refers to AI systems that can create new content (text, images, audio, etc.) that is similar to what humans might produce, essentially generating original outputs based on patterns learned from training data.

Unlike traditional discriminative AI (which might classify or detect something in data), generative AI actually generates something new. This could mean writing a paragraph of text that sounds like a human wrote it, creating a new image from a text description, composing music, or even designing synthetic data.

This field has gained huge attention recently because of advances in models like OpenAI’s GPT series (for text) and image generators like DALL-E or Stable Diffusion (for images). These models are trained on vast datasets (e.g., GPT on billions of sentences, DALL-E on millions of images) and learn the statistical patterns of the content. Then, when given a prompt, they produce original content that follows those patterns.

The core idea is that generative AI doesn’t just analyze data – it produces new writing, images, or other media, making it an exciting tool for startups in content-heavy arenas. The outputs aren’t just regurgitated examples from training data – they’re newly synthesized, which is why sometimes these models can even surprise us with creative or unexpected results.

Generative AI opens possibilities for automation in content creation and design, but it also comes with challenges (like the tendency of language models to sometimes produce incorrect information, known as “hallucinations”). Still, the practical applications are vast and highly relevant to startups looking to do more with less human effort in content generation.

Example: Many early-stage companies are already leveraging generative AI to punch above their weight. For example, a startup might offer a copywriting assistant that generates marketing content (blog posts, social media captions, product descriptions) with minimal human input. Instead of a human writer crafting each piece from scratch, the generative AI model (like GPT-4 or similar) can produce a draft that the marketing team just edits and approves. This dramatically speeds up content production.

Another startup example: using generative AI for design prototyping, where a model generates dozens of design ideas (for logos, app layouts, or even game characters) from a simple brief. There are also startups using generative models to produce synthetic training data (e.g., generating realistic-but-fake images of people to train a vision model without privacy issues).

These examples show how generative AI can be a force multiplier – it can create on behalf of the team, allowing startups to scale creative and development tasks in a way that was previously impossible. However, product managers need to understand the limitations too: generative models might require oversight, have biases from training data, or produce outputs that need fact-checking (especially in text).

So, while generative AI is powerful, using it effectively in a product means knowing both its capabilities and its quirks.

8. Large Language Models (LLMs)

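LLMs are a specific (and wildly popular) instance of generative AI focused on language. They’re called “large” because of their size – often measured in billions of parameters (weights) – which correlates with their ability to capture subtle patterns in language. Models like GPT-3, GPT-4, and Google’s PaLM are all LLMs. (BERT, an earlier and much smaller Transformer language model, is often mentioned alongside them, but it is used mainly for understanding text rather than generating it.)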

After training on everything from books to websites, an LLM can carry on a conversation, answer questions, write code, summarize documents, and more, all through a simple text prompt interface. These models use architectures like the Transformer (an innovation that made training such large models feasible by handling long-range dependencies in text effectively).

However, they don’t truly “understand” like a human – they predict likely sequences of words based on probability. This means they can sometimes produce incorrect or nonsensical answers with great confidence (again, the hallucination issue). Despite that, their utility is enormous, and they’re getting better rapidly. For a startup, an LLM can be thought of as a powerful text-processing engine that can be integrated via an API or fine-tuned for specific needs.
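
To make the "predicting likely sequences of words" idea concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally (those use Transformer networks with billions of parameters); it only counts which word tends to follow which in a made-up corpus. The function names and the corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts

def next_word_probabilities(model, word):
    """Turn raw follower counts into probabilities for the given word."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def predict_next_word(model, word):
    """Return the most probable follower, or None if the word was never seen."""
    probabilities = next_word_probabilities(model, word)
    return max(probabilities, key=probabilities.get) if probabilities else None

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))
print(next_word_probabilities(model, "sat"))
```

The toy model picks whatever followed a word most often in its training text, with no notion of whether the result is true. That is the same basic reason a much larger model can state something wrong with confidence.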

Large Language Models are very large neural network models trained on massive amounts of text, enabling them to understand language and generate human-like text. These models, such as GPT, use deep learning techniques to perform tasks like text completion, translation, summarization, and question-answering.

A common way startups use LLMs is by integrating with services like OpenAI’s API to add smart language features. For example, a customer service platform startup might use an LLM to suggest reply drafts to support tickets. When a support request comes in, the LLM analyzes the customer’s message and generates a suggested response for the support agent, saving time.

Another scenario: an analytics startup can offer a natural language query interface to a database – the user types a question in English (“What was our highest-selling product last month in region X?”) and the LLM interprets that and translates it into a database query or directly fetches an answer if it has been connected to the data.

This turns natural language into an actual tool for interacting with software. Startups also fine-tune LLMs on proprietary data to create specialized chatbots (for instance, a medical advice bot fine-tuned on healthcare texts, so it speaks the language of doctors and patients).

LLMs, being generalists, provide a flexible platform; a savvy startup can customize them to serve as content generators, conversational agents, or intelligent parsers of text. The presence of such powerful language understanding “as a service” means even a small team can add fairly advanced AI features without training a huge model from scratch – which is a game changer.
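
The natural-language query scenario described in this section can be sketched roughly as follows. This is a minimal illustration under stated assumptions: `stub_llm` is a hypothetical stand-in that returns a canned answer instead of calling a real LLM API, and the `sales` table, its columns, and the sample rows are invented for the example.

```python
import sqlite3

def question_to_sql(question, llm):
    """Ask the (injected) language model to translate a question into SQL."""
    prompt = (
        "Translate the question into one SQLite SELECT statement.\n"
        "Table: sales(product TEXT, region TEXT, units INTEGER)\n"
        f"Question: {question}\nSQL:"
    )
    sql = llm(prompt).strip()
    # Guardrail: only run read-only queries produced by the model.
    if not sql.lower().startswith("select"):
        raise ValueError("refusing to run non-SELECT statement")
    return sql

def stub_llm(prompt):
    """Hypothetical stand-in for a real LLM API call; returns a canned answer."""
    return ("SELECT product FROM sales WHERE region = 'X' "
            "GROUP BY product ORDER BY SUM(units) DESC LIMIT 1")

def answer(question, connection, llm=stub_llm):
    """Translate the question to SQL, run it, and return the rows."""
    sql = question_to_sql(question, llm)
    return connection.execute(sql).fetchall()

# Invented sample data in an in-memory database.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE sales (product TEXT, region TEXT, units INTEGER)")
connection.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("widget", "X", 120), ("gadget", "X", 340), ("widget", "Y", 500)],
)
result = answer("What was our highest-selling product in region X?", connection)
print(result)
```

Passing the model in as a plain function keeps the sketch testable without network access, and the SELECT-only check reflects the oversight point made earlier: generated output should be validated before software acts on it.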

9. Supervised Learning

Supervised Learning is a machine learning approach where a model is trained on labeled examples, meaning each training input comes with the correct output, allowing the model to learn the relationship and make predictions on new, unlabeled data.

Supervised learning is like learning with a teacher. We show the algorithm input-output pairs – for example, an image plus the label of what’s in the image (“cat” or “dog”), or a customer profile plus whether they clicked a promo or not – and the algorithm tunes itself to map inputs to outputs. It’s by far the most common paradigm for training AI models in industry because if you have the right labeled dataset, supervised learning tends to produce highly accurate models for classification or prediction tasks.

A formal description from IBM states:

“Supervised learning is defined by its use of labeled datasets to train algorithms to classify data or predict outcomes accurately.”

Essentially, the model is “supervised” by the labels: during training it makes a prediction and gets corrected by seeing the true label, gradually learning from its mistakes.
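
The predict-then-correct loop can be sketched with a perceptron, one of the simplest supervised learners. This is a toy illustration, not a production spam filter: the two features (how many times "free" appears, how many links an email contains) and the six labeled examples are invented for the sketch.

```python
def predict(weights, bias, features):
    """Return 1 (spam) if the weighted score is positive, otherwise 0 (not spam)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights from (features, label) pairs, where label is 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            prediction = predict(weights, bias, features)
            error = label - prediction  # 0 when right, +1 or -1 when wrong
            # The correction step: weights only move when the guess was wrong.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

# Invented labeled data: (count of "free", count of links) -> 1 = spam, 0 = not spam.
labeled_emails = [
    ((3, 2), 1), ((4, 1), 1), ((2, 3), 1),
    ((0, 0), 0), ((1, 0), 0), ((0, 1), 0),
]
weights, bias = train_perceptron(labeled_emails)
print(predict(weights, bias, (6, 3)))  # score a new, unlabeled email
```

Each wrong guess nudges the weights toward the true label, which is the "supervision" at work. Real systems use far more data and richer models, but the loop has the same shape.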

Most classic AI use cases are supervised: spam filtering (train on emails labeled spam vs. not spam), fraud detection (transactions labeled fraudulent or legit), image recognition (photos labeled with what’s in them), etc. The downside is it requires obtaining a quality labeled dataset, which can be time-consuming or costly (think of needing thousands of hand-labeled examples). But many startups find creative ways to gather labeled data, or they rely on pre-trained models (which were originally trained in a supervised manner on big generic datasets) and then fine-tune them for their task.

Real world example: Consider a startup offering an AI tool to vet job applications. They want to predict which applicants will perform well if hired. They could approach this with supervised learning: gather historical data of past applicants including their resumes and some outcome measure (e.g., whether they passed interviews, or their job performance rating after one year – that’s the label).

Using this, the startup trains a model to predict performance from a resume. Each training example is a resume (input) with the known outcome (output label). Over time, the model learns which features of a resume (skills, experience, etc.) correlate with success. Once trained, it can score new resumes to help recruiters prioritize candidates.

Another example: a fintech startup might use supervised learning to predict loan default. They train on past loans, each labeled as repaid or defaulted, so the model learns patterns indicating risk. In both cases, the key is the startup has (or acquires) a dataset with ground truth labels.

Supervised learning then provides a powerful predictive tool that can drive product features (like automatic applicant ranking or loan risk scoring). The better the labeled data (quality and quantity), the better the model usually becomes – which is why data is often called the new oil, and why even early-stage companies put effort into data collection and labeling strategies.

10. Fine-Tuning

Fine-tuning has become a go-to strategy in modern AI development, especially for startups. Rather than training a complex model from scratch (which can be like reinventing the wheel, not to mention expensive in data and compute), you start with an existing model that’s already learned a lot from a general dataset, and then train it a bit more on your niche data. This adapts the model’s knowledge to your context.

For example, you might take a large language model that’s learned general English and fine-tune it on legal documents to make a legal assistant AI. Fine-tuning is essentially a form of transfer learning – leveraging knowledge from one task for another. By fine-tuning, the model’s weights get adjusted slightly to better fit the new data, without having to start from random initialization. This typically requires much less data and compute than initial training, because the model already has a lot of useful “general understanding” built-in.
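
The claim that starting from pre-trained weights needs less training than starting from scratch can be illustrated with a toy model. This is only a sketch under strong simplifying assumptions: the "model" is a one-variable line y = w*x + b, the "general" task (y = 2x) and the "niche" task (y = 2.5x) are invented, and real fine-tuning involves networks with millions or billions of weights.

```python
def mean_squared_error(w, b, data):
    """Average squared gap between the line's predictions and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def gradient_descent(w, b, data, steps, learning_rate=0.1):
    """Run plain gradient descent on y = w*x + b, starting from the given weights."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

general_data = [(x, 2.0 * x) for x in range(4)]  # stand-in for the big generic task
niche_data = [(x, 2.5 * x) for x in range(4)]    # stand-in for the small niche task

# "Pre-training": many steps on the general task, starting from zero weights.
pretrained_w, pretrained_b = gradient_descent(0.0, 0.0, general_data, steps=500)

# Fine-tuning: only a few steps on niche data, starting from the pre-trained weights.
tuned = gradient_descent(pretrained_w, pretrained_b, niche_data, steps=5)
# Baseline: the same few steps on niche data, but starting from scratch.
scratch = gradient_descent(0.0, 0.0, niche_data, steps=5)

tuned_error = mean_squared_error(*tuned, niche_data)
scratch_error = mean_squared_error(*scratch, niche_data)
print(tuned_error < scratch_error)
```

With the same small training budget, the run that starts from the pre-trained weights ends up closer to the niche target because it began closer to it. The benefit depends on the general and niche tasks being related, which is also true of real fine-tuning.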

Fine-tuning can be done for various model types (language models, vision models, etc.), and there are even specialized efficient techniques (like Low-Rank Adaptation, a.k.a. LoRA) to fine-tune huge models with minimal resources.
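
To see why a low-rank technique like LoRA needs fewer resources, it helps to count trainable weights. In LoRA, the update to a weight matrix with d_out rows and d_in columns is expressed as the product of two much thinner matrices of rank r, and only those are trained. The sizes below are illustrative assumptions, not taken from any specific model.

```python
def full_finetune_params(d_out, d_in):
    """Trainable weights when updating a full d_out x d_in matrix."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable weights when the update is factored as B (d_out x r) times A (r x d_in)."""
    return rank * (d_out + d_in)

# Illustrative sizes only; real models have many such matrices of varying shapes.
d_out, d_in, rank = 4096, 4096, 8
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
reduction = full / lora
print(full, lora, reduction)
```

For this one matrix, the low-rank update trains 65,536 weights instead of 16,777,216, a 256-fold reduction. The trade-off is that the update is restricted to rank r, which in practice is often sufficient for adapting a model to a narrower task.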

For startups, fine-tuning is great because you can take open-source models or API models and give them your unique spin or proprietary knowledge. It’s how a small company can create a high-performing specialized AI without a billion-dollar budget.

To quote IBM’s definition, “Fine-tuning in machine learning is the process of adapting a pre-trained model for specific tasks or use cases.” This highlights that fine-tuning is all about starting from something that already works and making it work exactly for your needs. For a startup, fine-tuning can mean the difference between a one-size-fits-all AI and a bespoke solution that truly understands your users or data. It’s how you teach a big-brained AI new tricks without having to build the brain from scratch.

Real world example: Imagine a startup that provides a virtual personal trainer app. They decide to have an AI coach that can analyze user workout videos and give feedback on form. Instead of collecting millions of workout videos and training a brand new computer vision model, the startup could take a pre-trained vision model (say one that’s trained on general human pose estimation from YouTube videos) and fine-tune it on a smaller dataset of fitness-specific videos labeled with “correct” vs “incorrect” form for each exercise.

By fine-tuning, the model adapts to the nuances of, say, a perfect squat or plank. This dramatically lowers the barrier – maybe they only need a few thousand labeled video clips instead of millions, because the base model already understood general human movement.

Conclusion

Embracing AI in your product doesn’t require a PhD in machine learning, but it does help to grasp these fundamental terms and concepts. From understanding that AI is the broad goal, machine learning is the technique, and neural networks and deep learning are how we achieve many modern breakthroughs, to leveraging NLP for text, computer vision for images, and generative AI for creating new content, these concepts empower you to have informed conversations with your team and make strategic product decisions. Knowing about large language models and their quirks, the value of supervised learning with good data, and the shortcut of fine-tuning gives you a toolkit to plan AI features smartly.

The world of AI is evolving fast (today’s hot term might be an industry standard tomorrow), but with the ten terms above, you’ll be well-equipped to navigate the landscape and build innovative products that harness the power of artificial intelligence. As always, when integrating AI, start with a clear problem to solve, use these concepts to choose the right approach, and remember to consider ethics and user experience. Happy building – may your startup’s AI journey be a successful one!

Have questions? Book a call in our call calendar.

-

8 Tips for Managing Beta Testers to Avoid Headaches & Maximize Engagement

What do you get when you combine real-world users, unfinished software, unpredictable edge cases, and tight product deadlines? Chaos. Unless you know how to manage it. Beta testing isn’t just about collecting feedback; it’s about orchestrating a high-stakes collaboration between your team and real-world users at the exact moment your product is at its most vulnerable.

Done right, managing beta testers is part psychology, part logistics, and part customer experience. This article dives into how leading companies, from Tesla to Slack, turn raw user feedback into product gold. Whether you’re wrangling a dozen testers or a few thousand, these tips will help you keep the feedback flowing, the chaos controlled, and your sanity intact.

Here are the 8 tips:

- Clearly Define Expectations, Goals, and Incentives

- Choose the Right Beta Testers

- Effective Communication is Key

- Provide Simple and Clear Feedback Channels

- Let Testers Know They Are Heard. Encourage Tester Engagement and Motivation

- Act on Feedback and Close the Loop

- Anticipate and Manage Common Challenges

- Leverage Tools and Automation

1. Clearly Define Expectations, Goals, and Incentives

Clearly articulated goals set the stage for successful beta testing. First, your team should understand the goals so you design the test correctly.

Testers must also understand not just what they’re doing, but why it matters. When goals are vague, participation drops, feedback becomes scattered, and valuable insights fall through the cracks.

Clarity starts with defining what success looks like for the beta: Is it catching bugs? Testing specific features? Validating usability? Then, if you have specific expectations or requirements for testers, make them clear: describe how often testers should engage, what kind of feedback is helpful, how long the test will last, and what incentives they’ll get. Offering incentives that match testers’ time and effort can significantly improve both recruitment and the quality of feedback obtained.

Defining the test requirements for testers doesn’t mean you need to tell the testers exactly what to do. It just means that you need to communicate your expectations and requirements to them.

Check it out: We have a full article on Giving Incentives for Beta Testing & User Research

Even a simple welcome message outlining these points can make a big difference. When testers know their role and the impact of their contributions, they’re more likely to engage meaningfully and stay committed throughout the process.

2. Choose the Right Beta Testers